This Chip Changes Everything Forever

Introduction: The End of the Road and a New Beginning

For over half a century, technological progress has been powered by a single guiding principle: Moore’s Law. The observation that the number of transistors on a microchip doubles approximately every two years has shaped the design of every processor in our computers, smartphones, and data centers. However, this era is drawing to a close. Transistor features are approaching atomic scales, and the cost and energy demands of building ever-smaller transistors are becoming prohibitive, especially in the age of artificial intelligence. But just as one chapter ends, a new one begins. A new class of processor, fundamentally different from conventional designs, is emerging from research labs. This isn’t a slightly faster CPU or a more powerful GPU. This is a neuromorphic computing chip: a processor that mimics the architecture and operation of the human brain. Rather than simply improving on the past, it rethinks how computation is done, promising to ease some of the most critical bottlenecks of the 21st century and to change everything from the future of AI to the sustainability of our digital world.

A. The Brick Wall: Why Von Neumann Architecture is Failing AI

To understand why neuromorphic chips are revolutionary, we must first understand the fundamental limitation of the computers we use today. Virtually all modern computers are based on the Von Neumann architecture, a model conceived in the 1940s by John von Neumann.

A. The Von Neumann Bottleneck: This architecture separates the Central Processing Unit (CPU) from the memory (RAM). To perform a calculation, the CPU must constantly shuttle data back and forth across a communication channel (the bus) between the processor and the memory. This process is incredibly inefficient and creates a fundamental bottleneck. The CPU, no matter how fast, spends a vast amount of its time waiting for data, much like a brilliant chef stuck in a tiny kitchen who has to run to a distant warehouse for every single ingredient.

B. The Energy Inefficiency Crisis: This constant data shuttling is extraordinarily energy-intensive; moving a value to and from off-chip memory typically costs far more energy than computing with it. Training a single large AI model like GPT-3 has been estimated to consume about 1,300 MWh of electricity, roughly what 120 average U.S. homes use in a year. As we push for more powerful AI, scaling this inefficient architecture is becoming environmentally and economically unsustainable. We are building AI that is simply too expensive to run at scale.

C. The Mismatch for Parallel Tasks: The classic Von Neumann design is inherently sequential: it excels at executing a long list of instructions one after another. However, the core computations of neural networks, matrix multiplications, are massively parallel. GPUs help by running thousands of cores in parallel, but they still keep compute separate from main memory, so the weights and activations of a large model must be streamed in from off-chip memory and the bottleneck remains.
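To make the bottleneck concrete, here is a back-of-the-envelope sketch in Python. It compares the time a processor would spend on arithmetic with the time it would spend moving data for a single matrix-vector product. The function name and the hardware figures (10 TFLOP/s of compute, 100 GB/s of memory bandwidth) are hypothetical round numbers chosen for illustration, not measurements of any real chip.

```python
def matvec_time_estimate(rows, cols, peak_flops, mem_bandwidth, bytes_per_value=4):
    """Estimate compute time vs. data-movement time for y = W @ x.

    All hardware figures are supplied by the caller and are assumptions.
    """
    flops = 2 * rows * cols  # one multiply and one add per weight
    # Every weight is read once, plus the input vector and the output vector.
    bytes_moved = bytes_per_value * (rows * cols + cols + rows)
    compute_s = flops / peak_flops
    memory_s = bytes_moved / mem_bandwidth
    return compute_s, memory_s


# Hypothetical processor: 10 TFLOP/s peak compute, 100 GB/s memory bandwidth.
compute_s, memory_s = matvec_time_estimate(
    rows=4096, cols=4096, peak_flops=10e12, mem_bandwidth=100e9
)
print(f"compute: {compute_s * 1e6:.1f} us, memory traffic: {memory_s * 1e6:.1f} us")
print(f"memory time / compute time: {memory_s / compute_s:.0f}x")
```

Under these assumed figures, fetching the weights takes far longer than the arithmetic itself, which is the chef-and-warehouse problem in numbers. Real systems soften this with caches and batching, so treat the ratio as an illustration of the trend rather than a prediction.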

B. The New Paradigm: How Neuromorphic Chips Actually Work

Neuromorphic computing abandons the 1940s blueprint and instead takes its inspiration from the human brain, a remarkably efficient computer that runs on roughly 20 watts.

A. The Biological Blueprint: The Human Brain

The brain operates on a completely different set of principles:

* Massive Parallelism: Your roughly 86 billion neurons operate in parallel, each one integrating its own inputs independently, unlike a CPU’s sequential processing.

* Co-located Memory and Processing: In the brain, memory (synapses) and processing (neurons) are physically intertwined. There is no bottleneck because there is no separation.

* Event-Driven Operation (Spiking): Neurons are largely silent. They only “spike” or fire when a certain threshold of input is reached. This event-driven, sparse activity is incredibly energy-efficient, unlike a CPU that is constantly clocking and drawing power whether it’s doing useful work or not.
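The threshold behavior described above is often modeled with a leaky integrate-and-fire neuron. The following is a minimal Python sketch of that textbook model, not the circuit used by any particular chip; the function name and the `threshold` and `leak` values are illustrative assumptions.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9):
    """Leaky integrate-and-fire neuron over discrete time steps.

    inputs: input current at each step. Returns a list of 0/1 spikes.
    threshold and leak are illustrative values, not taken from any chip.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = leak * potential + current  # decay, then integrate input
        if potential >= threshold:
            spikes.append(1)   # threshold reached: fire
            potential = 0.0    # reset after the spike
        else:
            spikes.append(0)   # below threshold: stay silent
    return spikes


quiet = simulate_lif([0.0] * 10)   # no input at all
busy = simulate_lif([0.4] * 10)    # steady, modest input
print(quiet)
print(busy)
```

With no input the neuron never fires, and with steady input it fires only on the steps where the accumulated potential crosses the threshold. That sparsity is where the energy savings come from: in hardware, a silent neuron triggers no downstream work.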

B. The Engineering Translation: Building a Silicon Brain

Neuromorphic chips like Intel’s Loihi 2 and IBM’s TrueNorth translate these biological principles into silicon.

* Artificial Neurons and Synapses: The chip is a network of artificial neurons (computational units) connected by artificial synapses (memory elements). These components are physically interwoven on the chip, eliminating the Von Neumann bottleneck.

* Spiking Neural Networks (SNNs): Instead of passing continuous-valued activations between layers on every step, as conventional artificial neural networks do, neuromorphic chips run SNNs. Information is encoded in the timing and frequency of discrete electrical spikes, much like the brain. If there’s no input, there are no spikes, and the circuit consumes minimal power.

* Asynchronous Operation: These chips rely on asynchronous, event-driven circuits rather than a single global clock driving every operation. Different parts of the chip do work only when spikes arrive, so idle regions spend very little energy.
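The efficiency argument in the list above can be sketched by counting operations. The Python below is a software model running on an ordinary computer, so it only illustrates the bookkeeping, not the hardware itself; the function names, layer sizes, and 5% activity level are assumptions made for the example.

```python
def dense_layer_ops(n_inputs, n_outputs):
    """A conventional dense layer touches every weight on every step."""
    return n_inputs * n_outputs


def event_driven_step(spikes, weights, potentials):
    """Update output potentials only for the inputs that spiked.

    spikes: 0/1 per input; weights[i][j]: synapse from input i to output j.
    Mutates potentials in place and returns the number of synaptic updates.
    """
    ops = 0
    for i, fired in enumerate(spikes):
        if not fired:
            continue  # silent input: no work is done for it
        for j, w in enumerate(weights[i]):
            potentials[j] += w
            ops += 1
    return ops


n_inputs, n_outputs = 100, 10
weights = [[0.1] * n_outputs for _ in range(n_inputs)]
spikes = [1 if i % 20 == 0 else 0 for i in range(n_inputs)]  # 5 of 100 inputs fire
potentials = [0.0] * n_outputs

ops = event_driven_step(spikes, weights, potentials)
print(f"event-driven updates: {ops}, dense updates: {dense_layer_ops(n_inputs, n_outputs)}")
```

With 5 of 100 inputs active, the event-driven step performs 50 synaptic updates where a dense layer would perform 1,000. The saving scales with how sparse the spikes are, which is why these chips are best suited to workloads where most inputs are quiet most of the time.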

C. The Real-World Impact: A Revolution Across Industries

The implications of moving to a brain-inspired computing model are not merely theoretical; they could unlock capabilities that are impractical with today’s hardware.

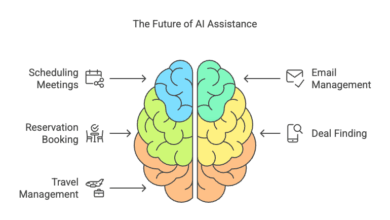

A. Ultra-Low Power AI at the Edge

The most immediate application is in “edge” devices—sensors, cameras, drones, and mobile phones that operate away from the cloud.

* Example: A security camera with a neuromorphic chip could run complex object and person recognition locally, 24/7, using only the power from a small solar cell. It would only send an alert (a “spike” of data) to the cloud when it detects an intruder, saving immense bandwidth and energy. Today’s cameras either send all footage to the cloud (expensive and power-hungry) or have limited, battery-draining onboard processing.

B. Real-Time Sensory Processing and Robotics

Neuromorphic chips can process sensory data (sight, sound, touch) with a speed and efficiency that matches biological systems.

* Autonomous Vehicles: A car could process input from LiDAR, cameras, and radar simultaneously in real-time, reacting to a sudden obstacle with the speed of a human reflex, but with superhuman perception.

* Advanced Robotics: Robots could achieve a new level of dexterity and adaptability, learning to manipulate unfamiliar objects and navigate dynamic, unstructured environments like a human would, powered by a chip that fits in the palm of your hand.

C. Revolutionizing Scientific Discovery

The ability to run complex simulations with extreme energy efficiency will transform scientific fields.

* Climate Science: Researchers could run hyper-detailed, real-time climate models to predict regional weather patterns and the effects of climate change with unprecedented accuracy.

* Drug Discovery: Scientists could simulate the interaction of millions of molecular compounds with a target protein in a fraction of the time and at a fraction of the energy cost of today’s supercomputers.

D. The Competitive Landscape: Who is Building the Future?

The race to commercialize neuromorphic computing is heating up, with tech giants and startups vying for leadership.

A. Intel’s Loihi 2: The Research Pioneer

Intel has been a frontrunner with its Loihi research chips, now in their second generation with Loihi 2. Intel reports that Loihi-based systems can perform certain optimization and sensory processing tasks up to 1,000 times faster and 10,000 times more efficiently than conventional processors. Intel is making these chips available to a global research community to foster an ecosystem and discover new applications.

B. IBM’s NorthPole: A Breakthrough in Architecture

IBM’s NorthPole chip, unveiled in 2023, is a related architectural marvel. While not a pure neuromorphic chip, it attacks the Von Neumann bottleneck by integrating memory directly into its cores. IBM reports that on the ResNet-50 image recognition benchmark it delivers about 22 times lower latency and about 25 times better energy efficiency than a comparable 12 nm GPU. It is a clear signal that the industry is exploring alternatives to the old model.

C. Startups and Academic Research

A vibrant ecosystem of startups (like BrainChip with its Akida processor) and university labs worldwide is pushing the boundaries of neuromorphic materials, algorithms, and applications, which should keep innovation moving quickly in the coming years.

E. The Challenges on the Path to Ubiquity

Despite the immense promise, widespread adoption faces significant hurdles.

A. The Software and Programming Paradigm Shift

How do you program a brain? Conventional programming models, built around sequential instructions, do not map directly onto neuromorphic chips. Languages like Python and C++ still play a role, for example in Intel’s open-source Lava framework, but new programming models and algorithms (based on Spiking Neural Networks) need to be developed and standardized. This is a monumental task that will require retraining many software engineers.

B. Precision vs. Efficiency Trade-Off

The brain is noisy and imprecise, yet it works brilliantly. Neuromorphic chips often trade the exact numerical precision of traditional CPUs for massive gains in efficiency and speed. While this is perfect for sensory and cognitive tasks, it is not suitable for applications requiring perfect mathematical accuracy, like running a spreadsheet or a bank’s ledger.
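A small Python sketch can illustrate this trade-off. It snaps a handful of weights to a coarse 16-level grid (the equivalent of 4-bit storage) and compares the exact and approximate weighted sums. The weights, inputs, and the 4-bit choice are made-up values for illustration and do not describe any specific chip.

```python
def quantize(values, levels=16, lo=-1.0, hi=1.0):
    """Snap each value to the nearest of `levels` evenly spaced points in [lo, hi]."""
    step = (hi - lo) / (levels - 1)
    return [lo + round((min(max(v, lo), hi) - lo) / step) * step for v in values]


def dot(a, b):
    """Plain weighted sum of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


weights = [0.83, -0.41, 0.27, -0.66, 0.12]  # hypothetical full-precision weights
inputs = [1.0, 0.0, 1.0, 1.0, 0.0]          # which inputs are active

exact = dot(weights, inputs)
approx = dot(quantize(weights), inputs)

print(f"exact sum: {exact:.3f}, 4-bit sum: {approx:.3f}")
print("same yes/no decision:", (exact > 0) == (approx > 0))
```

The two sums differ, yet both land on the same side of zero, so a detector that only asks "is this above the threshold?" reaches the same answer. A ledger cannot tolerate that kind of drift, since every digit of the total matters.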

C. Manufacturing and Integration

Fabricating these radically new chip architectures at scale and integrating them into existing technology ecosystems will be a complex and costly engineering challenge for the semiconductor industry.

Conclusion: The Dawn of a New Computational Epoch

The significance of the neuromorphic chip cannot be overstated. It represents a fundamental paradigm shift as profound as the move from the vacuum tube to the transistor. For decades, we have been trying to solve 21st-century problems with mid-20th-century computer architecture. We have been building faster and faster horses, when what we needed was an automobile.

This chip changes everything because it redefines what is possible. It promises to curb the unsustainable energy appetite of our data centers, enable a truly intelligent Internet of Things, and unlock forms of AI that are adaptive, contextual, and efficient. It could allow us to embed human-like intelligence into the very fabric of our world, from our homes and cities to our pockets and our bodies. The transition will take years, but the direction is now clear. The age of Von Neumann is ending. The age of the silicon brain is beginning. This chip isn’t just an incremental step; it is the key that will unlock the next chapter of human technological progress.