Preserving Privacy in the AI Epoch

The rapid and pervasive integration of Artificial Intelligence into the fabric of our daily lives represents one of the most significant technological shifts in human history. From the algorithms that curate our social media feeds to the large language models that assist with our work and the computer vision systems that monitor public spaces, AI’s capabilities are growing rapidly. This power, however, comes with a profound and often underappreciated cost: the erosion of personal privacy. We are transitioning from an era of digital surveillance, where our clicks and searches were tracked, to an epoch of inferential surveillance, where AI can infer our most intimate attributes (our health predispositions, political beliefs, emotional states, and even our likely future behaviors) from seemingly innocuous data. The central challenge of this new era is no longer just who collects our data, but what they can infer from it. Preserving privacy in the age of AI is not a niche concern; it is a fundamental requirement for maintaining human autonomy, dignity, and freedom in the 21st century.

A. The New Privacy Paradigm: From Data Collection to Inferential Analytics

The threat to privacy from AI is qualitatively different from previous digital threats. It is more insidious, more powerful, and far more difficult to regulate.

A.1. The Power of Inference: Predicting the Unsaid

Modern AI systems, particularly large language models and sophisticated machine learning algorithms, do not need explicit information to build a detailed profile of an individual.

- Behavioral Prediction: An AI can analyze your writing style, your purchase history, and your music preferences to infer your personality type, your socioeconomic status, and your potential political leanings, often with surprising accuracy.

- Health and Mental State Deduction: Researchers have shown that machine learning models can detect early signs of conditions like Parkinson’s disease from subtle patterns in keyboard typing dynamics, and can flag markers of depression in the language of social media posts, in some studies before a formal diagnosis was recorded.

- The Digital Panopticon: Together, these capabilities create a world where you are constantly being assessed and categorized based on your digital exhaust, the trail of data you leave behind through mundane, daily activities. This is no longer about tracking what you do; it’s about predicting who you are and what you might do next.

A.2. The Pervasiveness of AI Surveillance

Data collection is no longer confined to our computers and phones; it is embedded in the physical world around us.

-

Facial Recognition and Public Spaces: The proliferation of high-resolution cameras coupled with real-time facial analysis AI has effectively ended anonymity in public spaces. Cities and private companies can track your movements, associations, and even your emotional reactions as you walk down the street.

-

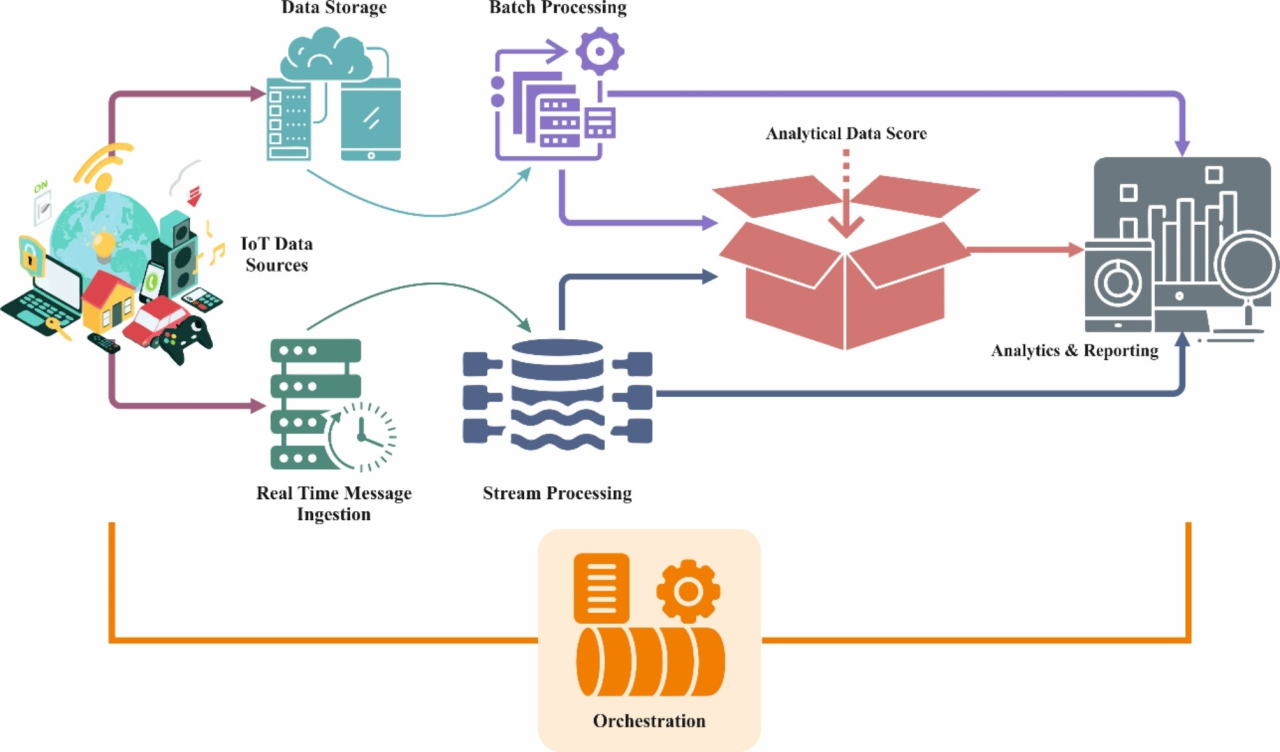

The Internet of Things (IoT) as a Sensor Network: Your smart TV, voice assistant, connected car, and even your refrigerator are continuously collecting ambient data. This vast, distributed sensor network provides a constant stream of information about your home life, habits, and conversations, feeding the AI models that seek to understand and influence you.

B. The High-Stakes Applications: Where Privacy Collides with Progress

The tension between AI’s utility and its privacy cost is most acute in several critical domains.

B.1. Healthcare and Medical AI

The potential for AI to revolutionize medicine is immense, but it requires access to our most sensitive personal data.

- The Promise of Personalized Medicine: AI can analyze genomic data, medical records, and lifestyle information to create hyper-personalized treatment plans and predict individual health risks, potentially saving millions of lives.

- The Privacy Peril: Delivering on this promise means building large repositories of incredibly sensitive information. A data breach could expose an individual’s genetic predispositions to employers or insurers. Furthermore, data collected for one purpose (e.g., cancer research) could later be used for another, less ethical purpose (e.g., setting insurance premiums).

B.2. The Workplace and Employee Monitoring

AI-powered analytics are transforming employer-employee relationships, often tilting the balance of power dramatically.

- Productivity Optimization Tools: Software can now track keystrokes, mouse movements, and application usage, and even analyze email tone and content to gauge employee productivity and engagement.

- The “Bossware” Epidemic: More invasive systems use camera-based AI to monitor attentiveness during remote meetings or to flag “suspicious behavior.” This creates a culture of constant surveillance, eroding trust and creating immense psychological pressure on workers.

B.3. Law Enforcement and Predictive Policing

The use of AI by governments and law enforcement agencies poses a direct threat to civil liberties.

- Predictive Policing Algorithms: These systems analyze historical crime data to forecast where future crimes are likely to occur. However, because that data reflects past policing patterns, they often perpetuate and amplify existing biases, leading to over-policing in minority neighborhoods and creating a feedback loop of discrimination.

- Social Credit Systems: While most closely associated with China, where the actual programs are more fragmented than a single nationwide score, the concept of using AI to aggregate data from various sources to assign citizens a “trustworthiness” score is a dystopian culmination of inferential analytics. It represents a system where privacy loss directly translates to a loss of social and economic rights.

C. The Technical and Regulatory Defense Arsenal

Combating these threats requires a multi-pronged approach, combining cutting-edge computer science with robust legal frameworks.

C.1. Technological Solutions for Privacy Preservation

Fortunately, the field of AI itself is developing tools to protect privacy.

-

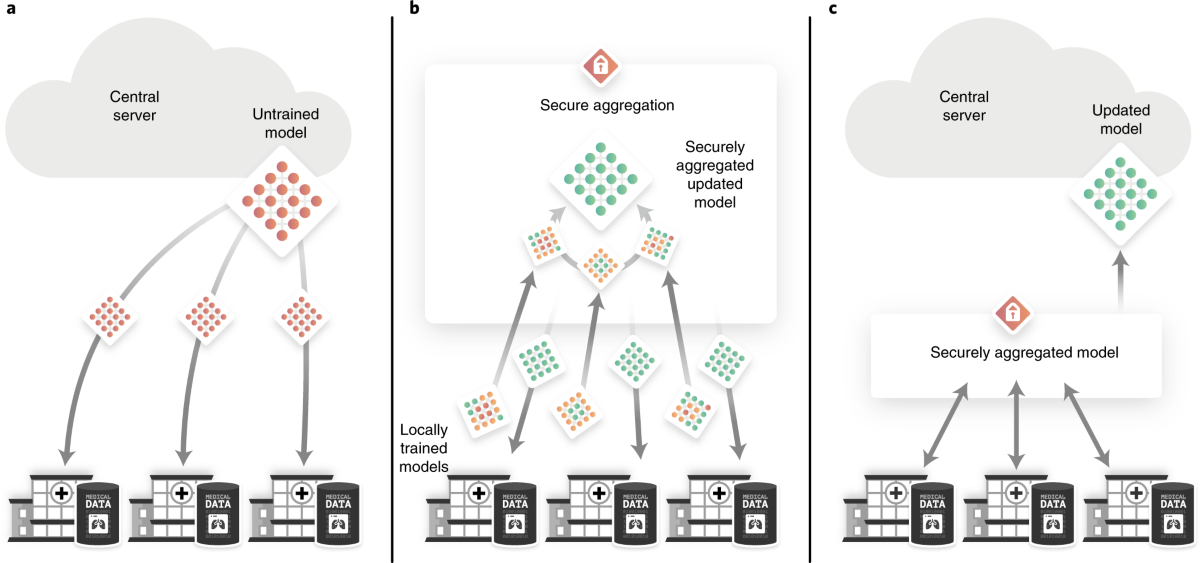

Federated Learning: This is a decentralized approach to training AI models. Instead of sending raw user data to a central server, the model is sent to the user’s device (e.g., their phone). The model learns locally from the user’s data, and only the anonymous, aggregated model updates—not the data itself—are sent back to the central server. Your data never leaves your possession.

-

Differential Privacy: This is a mathematical framework for adding a carefully calibrated amount of “statistical noise” to datasets or query results. It ensures that the output of an analysis remains virtually unchanged whether any single individual’s data is included in the dataset or not, thus making it impossible to reverse-engineer information about a specific person.

-

Homomorphic Encryption: This is a revolutionary form of encryption that allows computations to be performed directly on encrypted data without ever needing to decrypt it. A cloud server could, for example, analyze your encrypted medical records and provide a diagnosis without ever seeing the underlying sensitive information.
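To make the first two ideas above more concrete, here is a minimal Python sketch, not a production implementation. It assumes a toy one-parameter model (y ≈ w · x) for federated averaging and a simple counting query for differential privacy. All function names (`local_update`, `federated_average`, `laplace_noise`, `private_count`) and the sample data are hypothetical, chosen only for illustration.

```python
import random


def local_update(w, data, lr=0.05, steps=5):
    """Run a few gradient-descent steps for y ~ w * x on one client's private data."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(client_datasets, rounds=30, w=0.0):
    """Toy federated averaging: only locally trained weights reach the server."""
    total = sum(len(d) for d in client_datasets)
    for _ in range(rounds):
        # Each client trains on its own data; the raw (x, y) pairs are never pooled.
        local_weights = [local_update(w, d) for d in client_datasets]
        # Server step: average the client weights, weighted by local sample count.
        w = sum(lw * len(d) for lw, d in zip(local_weights, client_datasets)) / total
    return w


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng):
    """Counting query with Laplace noise; a count has sensitivity 1, so scale = 1/epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Two hypothetical clients whose data both follow y = 3x.
    clients = [
        [(1.0, 3.0), (2.0, 6.0)],
        [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
    ]
    print("learned weight:", federated_average(clients))

    ages = [23, 35, 41, 52, 67, 29, 74]
    rng = random.Random(0)
    print("noisy count of people over 40:",
          private_count(ages, lambda a: a > 40, epsilon=0.5, rng=rng))
```

A smaller `epsilon` means more noise and stronger privacy, while a larger one means a more accurate answer. Real deployments would typically rely on a vetted library rather than a hand-rolled sampler like this one, since naive floating-point noise generation can itself leak information.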

C.2. The Evolving Regulatory Landscape

Technology alone is not enough; strong legal guardrails are essential.

- The GDPR (General Data Protection Regulation): The European Union’s landmark legislation has set a global standard. Its principles of “data protection by design and by default,” its requirement of a lawful basis for processing (such as consent, which must be explicit for sensitive categories of data), and the powerful “right to be forgotten” provide a strong foundation for privacy rights in the AI age.

- The AI Act and Other Emerging Frameworks: The EU’s AI Act is the world’s first comprehensive attempt to regulate artificial intelligence directly. It takes a risk-based approach, banning certain AI applications (e.g., social scoring) and imposing strict transparency and oversight requirements on “high-risk” AI systems used in critical areas like employment and law enforcement.

- The Challenge of Enforcement and Global Harmonization: A major hurdle is the global nature of the internet and the power of multinational tech corporations. Regulations in one jurisdiction can be circumvented by operating from another. Achieving a harmonized, global standard for AI privacy is one of the great political challenges of our time.

D. The Individual’s Role: Empowerment in the Digital Age

While systemic solutions are crucial, individuals are not powerless. Cultivating “privacy hygiene” is an essential skill for the 21st century.

D.1. Practical Steps for Personal Data Defense

There are actionable steps everyone can take to reclaim a measure of their digital privacy.

- Audit and Fortify Your Digital Footprint: Regularly review the privacy settings on all social media platforms and online services. Use a password manager and enable two-factor authentication everywhere it is offered. Assume that default settings are designed for data collection, not privacy.

- Embrace Privacy-Enhancing Technologies: Use a reputable VPN (Virtual Private Network) to obscure your online activity from your internet service provider, keeping in mind that this shifts trust to the VPN provider. Consider using privacy-focused browsers like Brave or Firefox with anti-tracking extensions. Use search engines like DuckDuckGo that do not create personalized profiles.

- Practice Mindful Sharing: Before posting or providing information online, pause and consider the long-term implications. Could this data be used to infer something about me that I don’t want to be known? Teach children about digital literacy and the permanence of their online actions from an early age.

D.2. The Societal Conversation and Ethical Imperative

Ultimately, preserving privacy is a collective societal choice.

- Demanding Corporate Accountability: Consumers must pressure companies to adopt ethical data practices, be transparent about how AI is used, and offer genuine privacy-respecting alternatives.

- The Ethical Design Imperative: The next generation of technologists must be educated to build ethics and privacy into the foundation of their products, not as an afterthought. The principle of “Privacy by Design” must become an industry standard.

Conclusion: Forging a Future of Dignity and Autonomy

The age of AI does not have to be the end of privacy. It can be the beginning of a new chapter where we consciously choose to build systems that respect human dignity by design. The path forward requires a delicate and continuous balancing act—harnessing the incredible benefits of AI for health, productivity, and innovation while fiercely defending the fundamental human right to a private mental and personal space. The solutions will not be purely technical or purely regulatory; they must be a synthesis of both, guided by a strong and unwavering ethical compass. The question is not whether AI will continue to advance, but what kind of society we will become as it does. Will we be a society of transparent individuals, constantly profiled and predicted, or one of empowered citizens who use technology as a tool for enhancement, not subjugation? The answer to that question is the most important algorithm we have yet to write.
