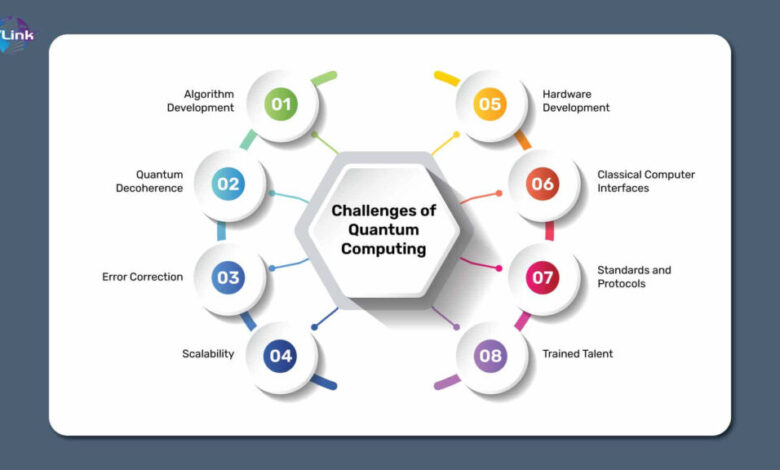

Quantum Computing’s Scalability Challenge Intensifies

For decades, quantum computing has existed primarily in the realm of theoretical physics and scientific speculation, a fascinating promise of a future computational revolution. However, the landscape is shifting at an unprecedented pace. The abstract race to build a useful quantum computer is now a tangible, high-stakes global competition involving the world’s most powerful tech corporations, ambitious startups, and national governments. The central narrative is no longer if a fault-tolerant quantum computer will be built, but when, by whom, and what technology will ultimately power it. This intense competition is causing the field to “heat up” in every sense of the word—from the blistering pace of innovation and soaring investments to the literal, profound physical challenge of keeping quantum processors cold enough to function. We are entering a critical phase where the foundational work of the past is colliding with the immense practical challenges of scaling, pushing the boundaries of science, engineering, and computer science in a unified effort to unlock one of humanity’s most transformative technologies.

A. The Fundamental Quantum Advantage: Beyond Classical Bits

To understand the current race, one must first grasp the fundamental shift that quantum computing represents. It is not merely a faster version of today’s computers; it is a different paradigm altogether.

A.1. Qubits and the Power of Superposition

A classical computer bit is binary, existing as either a 0 or a 1. A quantum bit, or qubit, leverages the quantum mechanical principle of superposition.

- Beyond Binary States: A qubit can be in state 0, state 1, or a superposition of both, described by a pair of amplitudes whose squared magnitudes give the probability of measuring each value. A register of two qubits can hold a superposition of all four basis states (00, 01, 10, 11) at once; three qubits span eight states, and so on.

- Exponential Scaling: This relationship is exponential. While 300 classical bits hold one 300-bit number at a time, the state of 300 perfectly stable qubits is described by 2³⁰⁰ amplitudes, a figure that exceeds the number of atoms in the known universe. A measurement still returns only one 300-bit result, so quantum algorithms must use interference to make the useful answers likely. This is why the potential speedup applies to specific problems rather than to computing in general.
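The exponential growth described above can be sketched with a tiny state-vector simulation. This is a minimal illustration in plain Python, not how real hardware is programmed; the function names `apply_hadamard` and `uniform_superposition` are hypothetical, and the figure of roughly 10⁸⁰ atoms in the observable universe is a commonly quoted estimate used here as an assumption.

```python
import math

def apply_hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` of a state vector (list of amplitudes)."""
    h = 1 / math.sqrt(2)
    bit = 1 << target
    new_state = [0.0] * len(state)
    for index, amp in enumerate(state):
        partner = index ^ bit
        if index & bit:
            # |1> becomes (|0> - |1>) / sqrt(2)
            new_state[partner] += h * amp
            new_state[index] -= h * amp
        else:
            # |0> becomes (|0> + |1>) / sqrt(2)
            new_state[index] += h * amp
            new_state[partner] += h * amp
    return new_state

def uniform_superposition(n_qubits):
    """Start in |00...0> and put every qubit into an equal superposition."""
    state = [0.0] * (2 ** n_qubits)
    state[0] = 1.0
    for qubit in range(n_qubits):
        state = apply_hadamard(state, qubit)
    return state

state = uniform_superposition(3)
probabilities = [abs(amp) ** 2 for amp in state]

print(len(state))            # number of amplitudes needed for 3 qubits
print(probabilities)         # each basis state is equally likely
print(2 ** 300 > 10 ** 80)   # 300 qubits vs. an assumed atom count
```

Each added qubit doubles the length of `state`, which is why classical simulation of this kind runs out of memory after a few dozen qubits.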

A.2. Entanglement: The “Spooky” Correlation

Another critical quantum phenomenon is entanglement, which Albert Einstein famously referred to as “spooky action at a distance.”

- Inseparable Link: When qubits become entangled, the state of one qubit can no longer be described independently of the other, no matter the physical distance between them. Measuring one qubit immediately fixes what a measurement of its entangled partner will show, although each result looks random on its own and the correlation cannot be used to send a signal faster than light.

- Computational Power: Entanglement allows qubits to work in a highly coordinated fashion. A multi-qubit system can carry correlations that have no counterpart in classical bits, whose values can always be described one bit at a time.
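The correlation described above can be illustrated by sampling measurements from the simplest entangled state, the Bell state (|00> + |11>)/√2. This is a rough sketch in plain Python that draws outcomes from the state's probabilities; the helper names and the fixed random seed are assumptions made for the example.

```python
import math
import random

def bell_state():
    """Amplitudes of (|00> + |11>) / sqrt(2), ordered as 00, 01, 10, 11."""
    h = 1 / math.sqrt(2)
    return [h, 0.0, 0.0, h]

def sample_measurements(state, shots, seed=0):
    """Draw measurement outcomes with probability |amplitude|^2 for each basis state."""
    rng = random.Random(seed)
    labels = ["00", "01", "10", "11"]
    weights = [abs(amp) ** 2 for amp in state]
    return rng.choices(labels, weights=weights, k=shots)

outcomes = sample_measurements(bell_state(), shots=1000)

# The two qubits always agree, even though each one alone is a coin flip.
always_agree = all(result[0] == result[1] for result in outcomes)
fraction_of_ones = sum(result[0] == "1" for result in outcomes) / len(outcomes)

print(always_agree)
print(fraction_of_ones)
```

The first qubit on its own is as unpredictable as a fair coin; only when the two results are compared does the perfect agreement show up.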

A.3. The Quantum Supremacy and Advantage Milestone

The field has recently celebrated critical proof-of-concept achievements.

- Quantum Supremacy: This term was coined to describe the point where a quantum computer performs a specific, perhaps esoteric, calculation that is practically impossible for even the world’s most powerful classical supercomputer. Google’s claim of achieving this in 2019 with its 53-qubit Sycamore processor was a landmark moment, despite subsequent debates about classical simulation optimizations.

- Quantum Advantage: This is the more commercially significant milestone. It refers to a quantum computer solving a practically useful problem faster, cheaper, or more accurately than the best classical alternative. The race is now fiercely focused on achieving a clear, uncontested quantum advantage in areas like drug discovery or financial modeling.

B. The Hardware Arms Race: A Multi-Pronged Path to Scalability

There is no consensus on the best way to build a quantum computer. The current “heating up” of the field is characterized by several parallel and fiercely competitive hardware approaches.

B.1. Superconducting Qubits: The Current Frontrunner

Pioneered by companies like Google and IBM, this approach is currently the most advanced.

- How It Works: These qubits are fabricated from superconducting metals and require cooling to temperatures colder than deep space (around 15 millikelvin) to operate. They are controlled by microwave pulses.

- Strengths and Trajectory: The technology benefits from advanced semiconductor fabrication techniques. IBM’s roadmap, which targets more than 4,000 qubits by 2025 and more than 10,000 beyond that, exemplifies the aggressive scaling in this camp. The focus is on improving qubit connectivity and reducing error rates through better materials and control systems.

- The Scaling Challenge: The primary hurdle is the immense and complex cryogenic infrastructure required. As the number of qubits grows, managing the wiring, heat load, and physical space inside a dilution refrigerator becomes a monumental engineering challenge.

B.2. Trapped-Ion Qubits: The Precision Contender

Companies like IonQ and Honeywell (whose quantum business now operates as Quantinuum) are pursuing this highly precise alternative.

- How It Works: Individual atoms (ions) are trapped in place by electromagnetic fields in a vacuum. Quantum information is stored in the internal energy states of these ions, and lasers are used to manipulate and entangle them.

- Inherent Advantages: Trapped-ion qubits are virtually identical to one another, leading to very low error rates and high “gate fidelities” (the accuracy of quantum operations). They are also naturally interconnected, as any ion can interact with any other ion in the chain via their collective motion.

- The Scaling Challenge: The main difficulty lies in controlling ever-longer chains of ions and speeding up the gate operations, which are typically slower than those in superconducting systems.
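To put a number on the connectivity advantage mentioned above, the sketch below counts how many qubit pairs can interact directly in a fully connected ion chain and compares that with a nearest-neighbor square grid. The grid is only an assumed stand-in for a fixed-wiring chip layout; real devices use a variety of layouts, and both function names are hypothetical.

```python
def all_to_all_pairs(n_qubits):
    """Pairs that can interact directly when every qubit couples to every other."""
    return n_qubits * (n_qubits - 1) // 2

def square_grid_pairs(side):
    """Nearest-neighbor pairs on a side x side grid (an assumed example layout)."""
    # Each row has (side - 1) horizontal links; the same holds for each column.
    return 2 * side * (side - 1)

ion_chain_pairs = all_to_all_pairs(16)
grid_pairs = square_grid_pairs(4)

print(ion_chain_pairs)  # 16 ions, all-to-all
print(grid_pairs)       # 16 qubits on a 4 x 4 grid
```

On a grid, two distant qubits can still interact, but only after extra swap operations move their states next to each other, and each extra operation adds time and error.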

B.3. Topological Qubits: The Dark Horse

Microsoft is betting heavily on this theoretically robust but experimentally elusive approach.

- The Conceptual Leap: Instead of storing information in a single particle, topological qubits rely on the collective state of a system of particles, specifically anyons. The quantum information is stored in the braiding of their worldlines in spacetime, making it inherently protected from local noise.

- The Major Advantage: The primary benefit is fault-tolerance. Because the information is non-local, it is far less susceptible to the decoherence that plagues other qubit types. This could dramatically reduce the overhead of error correction.

- The Immense Hurdle: The challenge is fundamental: scientists have yet to definitively create and control a topological qubit in the lab. It remains a high-risk, high-reward research path.

B.4. Other Promising Architectures

The race includes other innovative approaches, such as:

- Photonic Quantum Computing: Using particles of light (photons) to transmit quantum information. This is promising for quantum communication and certain types of computations, especially at room temperature.

- Cold Atom and Neutral Atom Qubits: Using optical tweezers to trap neutral atoms in arrays, offering a highly flexible and scalable geometry for arranging qubits.

- Semiconductor Spin Qubits: Leveraging the spin of an electron or nucleus in a semiconductor material (similar to classical chips), which could benefit from existing silicon manufacturing infrastructure.

C. The Software and Algorithmic Layer: Building for a Quantum Future

Hardware is only half the story. A parallel explosion of activity is occurring in quantum software, algorithms, and cloud access.

C.1. The Algorithmic Toolkit

Researchers have identified specific classes of problems where quantum computers are expected to shine.

- Quantum Simulation: The most natural application. Simulating quantum systems (like complex molecules for drug discovery or new chemical catalysts) is exponentially hard for classical computers but a natural fit for a quantum machine. This could revolutionize materials science and pharmaceuticals.

- Optimization Problems: From streamlining global logistics and financial portfolio management to optimizing neural network training, many complex optimization tasks could see dramatic speedups with specialized quantum algorithms.

- Cryptography and Factorization: Shor’s algorithm, a famous quantum algorithm, could break widely used public-key encryption systems like RSA. This threat is driving the parallel field of post-quantum cryptography, which develops new encryption methods that are secure against quantum attacks.
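The structure of Shor’s algorithm can be sketched on a toy number. Factoring N reduces to finding the order r of a chosen base a modulo N, after which two greatest-common-divisor calculations reveal the factors. In the sketch below the order is found by classical brute force, which is exactly the step a quantum computer would replace with quantum period finding; the function names and the example values N = 15, a = 7 are illustrative assumptions.

```python
from math import gcd

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found here by classical brute force.

    This is the step that Shor's algorithm performs on a quantum computer.
    Assumes gcd(a, n) == 1, otherwise no such r exists.
    """
    r = 1
    value = a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a, n):
    """Classical post-processing: turn the order of a mod n into factors of n."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared      # lucky guess: a already shares a factor
    r = find_order(a, n)
    if r % 2 == 1:
        return None                     # odd order: retry with another base
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                     # trivial square root: retry with another base
    return gcd(half - 1, n), gcd(half + 1, n)

order = find_order(7, 15)
factors = factor_from_order(7, 15)

print(order)
print(factors)
```

For a 2,048-bit RSA modulus the brute-force loop in `find_order` is hopeless, which is why the security of RSA depends on no efficient period-finding method being available to attackers.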

C.2. Quantum Cloud Access and Hybrid Computing

Recognizing that fault-tolerant machines are years away, companies are adopting a pragmatic strategy.

- Quantum Processing Units (QPUs) in the Cloud: IBM, Google, Amazon Braket, and Microsoft Azure Quantum now offer cloud-based access to real quantum hardware, whether their own or that of partner companies. This allows researchers, students, and developers worldwide to run experiments and gain hands-on experience without owning a multi-million-dollar cryogenic system.

- The Hybrid Quantum-Classical Model: For the foreseeable future, the most powerful approach will be to use classical computers and quantum processors in tandem. A classical computer handles the bulk of a workflow, offloading specific, calculation-intensive sub-tasks to a quantum co-processor. This model is the foundation for algorithms like the Variational Quantum Eigensolver (VQE), used in quantum chemistry.
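The hybrid loop behind VQE-style algorithms can be sketched in a few lines. A classical optimizer proposes a circuit parameter, the quantum processor returns a measured energy, and the optimizer updates the parameter. In this toy version the "quantum" call is replaced by its known closed-form result for a single qubit: preparing Ry(θ)|0> and measuring Z gives an expectation value of cos θ. The function names, starting angle, learning rate, and step count are all assumptions chosen for illustration.

```python
import math

def quantum_expectation(theta):
    """Stand-in for a QPU call: <Z> for the state Ry(theta)|0>, which equals cos(theta)."""
    return math.cos(theta)

def parameter_shift_gradient(theta):
    """Estimate d<Z>/d(theta) from two extra 'quantum' evaluations (parameter-shift rule)."""
    shift = math.pi / 2
    return 0.5 * (quantum_expectation(theta + shift) - quantum_expectation(theta - shift))

def minimize_energy(theta=0.5, learning_rate=0.4, steps=100):
    """Classical outer loop: plain gradient descent on the measured energy."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)

theta_opt, energy = minimize_energy()

print(theta_opt)  # approaches pi, i.e. the qubit flipped to |1>
print(energy)     # approaches -1, the lowest eigenvalue of Z
```

On real hardware `quantum_expectation` would be an average over many noisy shots, so the optimizer has to cope with statistical and device noise that this sketch leaves out.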

C.3. The Programming Stack and Error Mitigation

A full software stack is being built, from low-level pulse control to high-level programming frameworks such as Qiskit (IBM) and Cirq (Google).

- The Daunting Challenge of Noise: Today’s quantum processors are noisy intermediate-scale quantum (NISQ) devices. Qubits are fragile and lose their quantum state (decohere) due to vibrations, temperature fluctuations, and electromagnetic interference.

- Advanced Error Mitigation: While full-scale quantum error correction requires many physical qubits to protect one “logical” qubit, researchers are developing sophisticated error mitigation techniques. These software-based methods help to extrapolate what the result of a computation would have been in a noiseless environment, effectively extending the computational power of current NISQ devices.
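One technique of this kind is zero-noise extrapolation: run the same circuit at several deliberately amplified noise levels, then extrapolate the measured values back to zero noise. The sketch below shows only the extrapolation step, using a polynomial through the data points (Richardson extrapolation). The noise model in `noisy_expectation` is entirely synthetic and hypothetical; it stands in for real measurements, and its coefficients are made up for the example.

```python
def richardson_extrapolate(scale_factors, values):
    """Evaluate at zero noise the polynomial passing through all (scale, value) points."""
    estimate = 0.0
    for i, (x_i, y_i) in enumerate(zip(scale_factors, values)):
        weight = 1.0
        for j, x_j in enumerate(scale_factors):
            if j != i:
                # Lagrange basis polynomial for point i, evaluated at x = 0
                weight *= (0.0 - x_j) / (x_i - x_j)
        estimate += weight * y_i
    return estimate

def noisy_expectation(scale, ideal=1.0):
    """Synthetic stand-in for a hardware measurement at a given noise scale (assumed model)."""
    return ideal - 0.12 * scale + 0.01 * scale ** 2

scales = [1.0, 2.0, 3.0]                      # 1.0 = the device's native noise level
measured = [noisy_expectation(s) for s in scales]
mitigated = richardson_extrapolate(scales, measured)

print(measured)   # every raw value is below the ideal result
print(mitigated)  # the extrapolated estimate at zero noise
```

The recovery is exact here only because the synthetic noise happens to be a quadratic in the scale factor. With real devices the extrapolation is an estimate whose quality depends on how well the chosen fit matches the actual noise.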

D. Navigating the Quantum Future: Implications and a Global Landscape

The maturation of quantum computing is not just a technical event; it carries profound economic, security, and geopolitical implications.

D.1. The Geopolitical and Economic Stakes

Nations recognize quantum technology as a strategic priority.

- National Initiatives: China has made quantum a cornerstone of its national strategy, with massive state-backed investment. The United States has passed acts like the National Quantum Initiative Act, and the European Union has launched its Quantum Flagship program. This is widely seen as a new “Sputnik moment.”

- The Private Investment Surge: Venture capital and corporate R&D funding are pouring into quantum startups, covering everything from hardware and software to specific applications in finance and chemistry. The total ecosystem is valued in the tens of billions of dollars and growing rapidly.

D.2. The Looming Cybersecurity Threat

The potential of a large-scale quantum computer to break current encryption is a “harvest now, decrypt later” threat.

- Proactive Defense: Sensitive data encrypted today and recorded by an adversary could be decrypted in the future once a powerful enough quantum computer is built. This is driving a global migration towards post-quantum cryptography (PQC), a set of new cryptographic standards that are resistant to quantum attacks. Governments and corporations are already beginning this critical transition.
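A common rule of thumb for judging this risk is Mosca’s inequality: data is exposed if the time it must stay secret plus the time needed to migrate to quantum-safe encryption exceeds the time until a capable quantum computer exists. The sketch below encodes that comparison; the function name is hypothetical and every number in the examples is invented for illustration, not a forecast.

```python
def data_at_risk(shelf_life_years, migration_years, years_until_quantum):
    """Mosca's inequality: exposed if shelf life + migration time > time until a capable machine."""
    return shelf_life_years + migration_years > years_until_quantum

# Hypothetical example: records that must stay confidential for 10 years,
# a 5-year migration project, and an assumed 12 years until a capable machine.
long_lived_records = data_at_risk(10, 5, 12)

# Hypothetical example: short-lived session data under the same assumptions.
short_lived_sessions = data_at_risk(2, 3, 12)

print(long_lived_records)
print(short_lived_sessions)
```

The estimate of when a capable machine will arrive is the least certain input, which is why long-lived data is usually the first priority in a migration.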

D.3. The Societal and Ethical Considerations

As with any powerful technology, quantum computing raises important questions.

- The Accessibility Gap: Will this technology concentrate power in the hands of a few entities that can afford the immense infrastructure? Ensuring broad access through cloud platforms and fostering a diverse quantum workforce are essential to democratizing its benefits.

- Responsible Development: The potential for quantum computing to disrupt industries, from finance to security, necessitates a conversation about ethical guidelines, regulatory frameworks, and the responsible use of this transformative power.

Conclusion: The Long and Winding Road to Quantum Utility

The field of quantum computing is undoubtedly “heating up,” charged with a palpable sense of momentum and competition. The path forward, however, remains long and fraught with immense scientific and engineering challenges. The transition from demonstrating a few dozen noisy qubits to building a fault-tolerant machine with millions of physical qubits is a journey that may take decades. It is a marathon, not a sprint.

Yet, the progress is undeniable. Each passing year brings improved qubit fidelities, more sophisticated control systems, more powerful algorithms, and a deeper understanding of the quantum world. The ultimate prize—a machine that can solve problems beyond the reach of any conceivable classical computer—is so transformative that it justifies the global effort. The heat is on, and it is this very pressure that is forging the innovations that will eventually bring the full potential of quantum computing out of the cold vacuum of the lab and into the warm light of everyday utility.
